Voices from AI

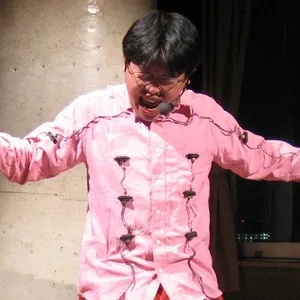

Voices from AI in Experimental Improvisation, by Tomomi Adachi, Andreas Dzialocha, and Marcello Lussana

The project Voices from AI in Experimental Improvisation consists of research and a performance on the use of artificial intelligence (AI) in experimental music and improvisation, using some of the latest technology, namely an algorithm called a recurrent neural network (RNN). We built an AI system named “Tomomibot” that can react to Adachi’s voice improvisation in real time. The AI plays voice samples that the RNN generates from 10 hours of recordings of Adachi’s voice improvisation, as well as the raw recordings themselves.

The AI analyzes how Adachi reacts to sounds from other performers using the mel-frequency cepstrum algorithm, which is widely used in speech recognition, then learns the improvisation and builds improvisation models. These models are used to predict how Adachi improvises. During the performance, the AI receives Adachi’s voice improvisation as input through the same mel-frequency cepstrum algorithm, then plays a voice sample similar to what it predicts Adachi will play as the next sound event.

Conceptually, the performance is a duo between Adachi (human) and Adachi (AI), a combination that would never be possible without AI. Of course, the two do not play in the same manner. While humans try to improvise by escaping from predefined predictions, the AI’s understanding of music is not the same as a human’s. As a further improvement of the system, Adachi records how he reacted to the AI in each session; the AI then learns from the recording and updates the improvisation model. At the same time, Adachi tries to understand and sometimes imitates the AI’s way of improvising, so the process is mutual between human and AI.

Playlist: https://youtube.com/playlist?list=PLKuHzp40Ex3Cdt1yyQAumymkmo57PYfcd&
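
The sample-selection step described in the text above (playing a voice sample similar to the predicted next sound event) could be sketched as a nearest-neighbour lookup over feature vectors. This is only an illustrative sketch, not Tomomibot's actual code: the function name `nearest_sample`, the sample names, the three-number vectors standing in for mel-frequency cepstral features, and the use of Euclidean distance are all assumptions made for the example.

```python
import math


def nearest_sample(predicted, bank):
    """Return the name of the sample whose features are closest to `predicted`.

    `bank` maps a (hypothetical) sample name to its feature vector.
    Closeness is measured by Euclidean distance, which is an assumption.
    """
    best_name, best_dist = None, math.inf
    for name, features in bank.items():
        dist = math.dist(predicted, features)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name


# Hypothetical sample bank: short vectors stand in for real cepstral features.
sample_bank = {
    "whisper": [0.1, 0.2, 0.1],
    "growl": [0.9, 0.7, 0.3],
    "click": [0.2, 0.9, 0.8],
}

# Hypothetical model output: predicted features of the next sound event.
predicted_next = [0.8, 0.6, 0.4]
chosen = nearest_sample(predicted_next, sample_bank)
print(chosen)
```

In a real system the prediction would come from the trained improvisation model and the bank would hold features extracted from the recorded and generated voice samples; here both are hard-coded to keep the sketch self-contained.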