Embedded/Embodied

About

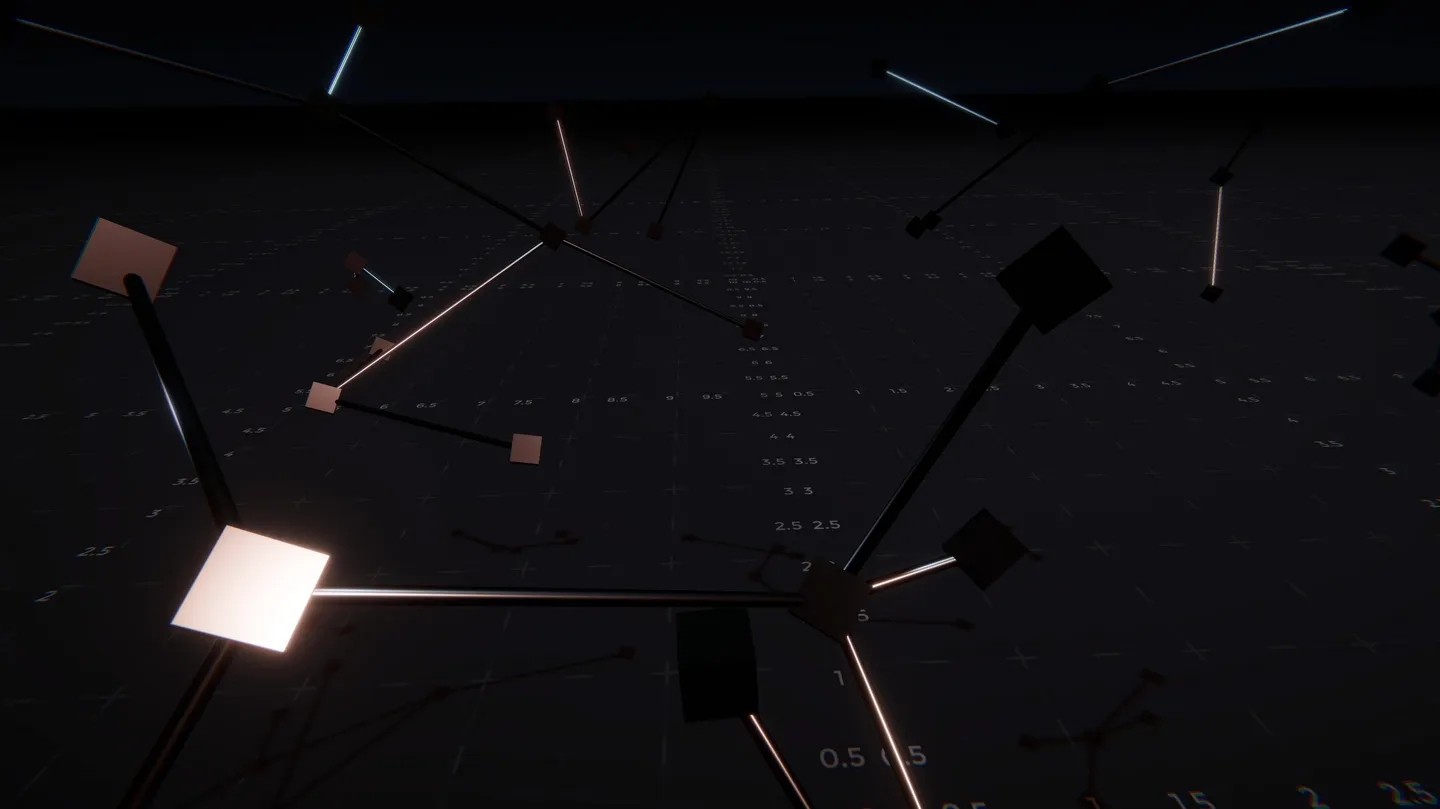

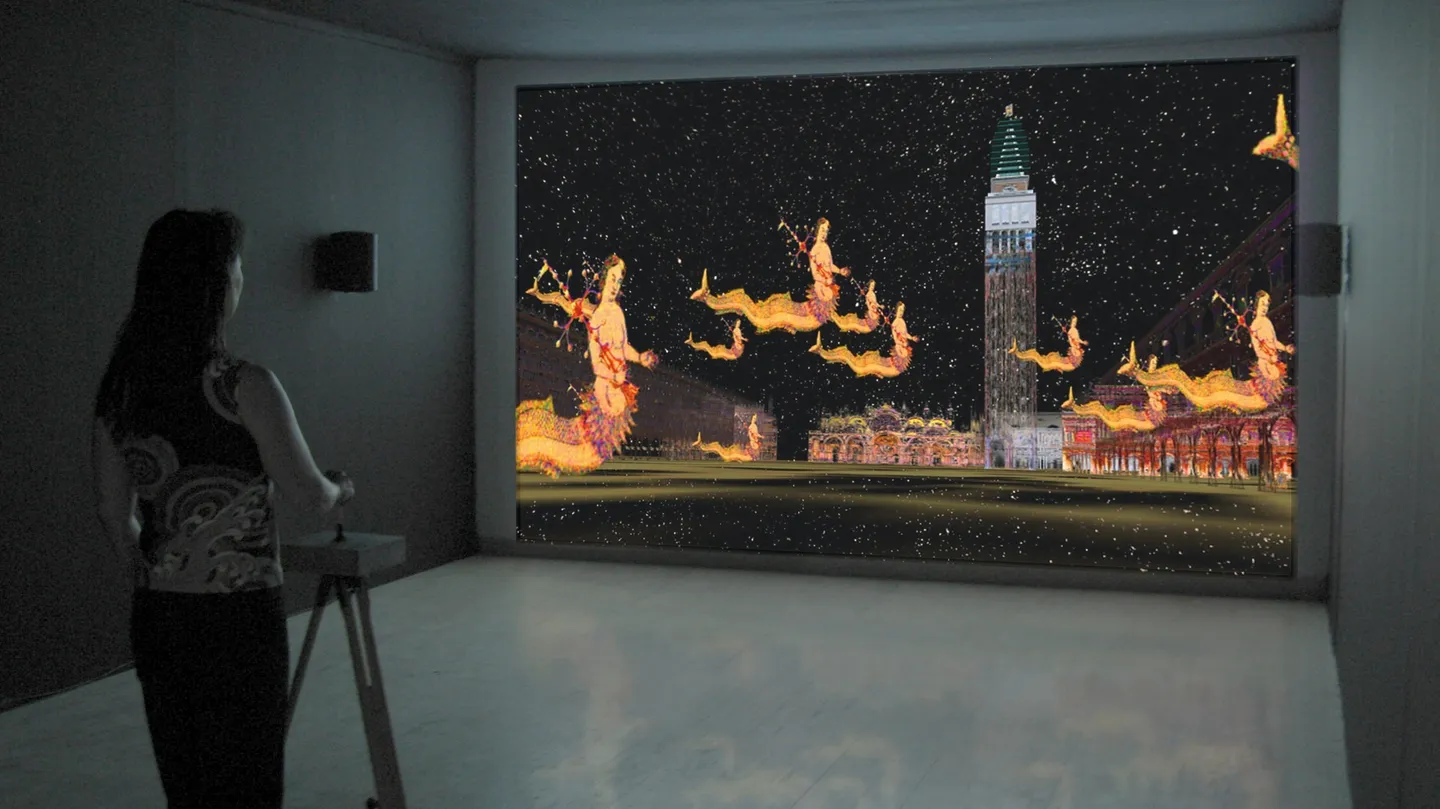

A concept uniting ‘acoustics’ and ‘epistemology’, acoustemology investigates sound as a means of obtaining knowledge, delving into what can be known through listening. Unlike analytical, reductionist, and quantitative methods, Steven Feld’s acoustemology is preoccupied not with evaluation but with the lived experience of sound. Within this paradigm, Embedded/Embodied is an interactive installation and a digital sound walk that explores AI sound recognition, generation, and communication through a situated and reflexive method. It speculates on machine learning approaches that could go beyond conventional quantitative and generalized methods and incorporate cultural, ecological, and cosmological diversity through a lived and locative methodology. It also taps into the epistemological possibility of using Augmented Reality as a situation for developing a relational form of sonic knowledge: by territorializing computational sonic investigations within the visual field, it draws on the interplay between hearing and the other senses.

When we say an AI agent ‘listens’ to its surroundings, what do we mean? How can it ‘know’ a physical space? How does the knowledge it gains through listening differ from the way humans know spaces through sound, and is there a way for us to imagine the AI agent’s experience of that space?

The Embedded/Embodied Virtual Sound Walk is a web-based experience that uses spatial audio, point-cloud captures, and navigational interactions. It was developed by training and exploring the Embedded/Embodied system on audio recordings from the Het HEM space that is available on The Couch Platform. The aim was to create an interactive documentation of this artistic research project in which the system’s learning epochs can be explored in a spatiotemporal manner. It is an archival diary of the system’s situated interactions with the local environment.